Jerikan+Ansible: a configuration management system for network

ex-Blade Network Team

There are many resources for network automation with Ansible. Most of them only cover the first steps or limit themselves to a narrow scope, giving no clue on how to go further. Real network environments may be large, versatile, heterogeneous, and filled with exceptions. Unlike for Puppet and SaltStack, real-world examples of Ansible deployments are scarce, which leads many teams to build brittle and incomplete automation solutions.

We have released our attempt to tackle this problem under an open-source license:

- Jerikan, a tool to build configuration files from a single source of truth and Jinja2 templates, along with its integration into the GitLab CI system;

- an Ansible playbook to deploy these configuration files on network devices; and

- the configuration data and the templates for our, now defunct, datacenters in San Francisco and South Korea, covering many vendors (Facebook Wedge 100, Dell S4048 and S6010, Juniper QFX 5110, Juniper QFX 10002, Cisco ASR 9001, Cisco Catalyst 2960, Opengear console servers, and Linux), and many functionalities (provisioning, BGP-to-the-host routing, edge routing, out-of-band network, DNS configuration, integration with NetBox and IRRs).

Here is a quick demo to configure a new peering:

This work is the collective effort of Cédric Hascoët, Jean-Christophe Legatte, Loïc Pailhas, Sébastien Hurtel, Tchadel Icard, and Vincent Bernat. We are the network team of Blade, a French company operating Shadow, a cloud-computing product. In May 2021, our company was bought by Octave Klaba and the infrastructure is being transferred to OVHcloud, saving Shadow as a product, but making our team redundant.

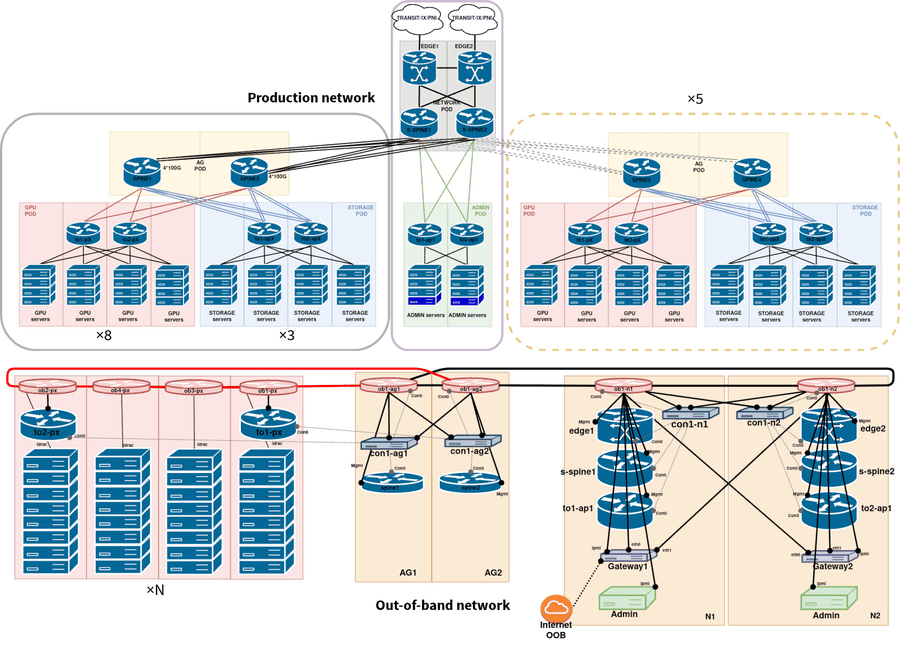

Our network comprised around 800 devices spread over 10 datacenters, with more than 2.5 Tbps of available egress bandwidth. The released material is therefore a substantial example of managing a medium-scale network with Ansible. We have left out the handling of our legacy datacenters to make the result more readable, while keeping enough material for it not to become a trivial example.

Jerikan

The first component is Jerikan. As input, it takes a list of devices, configuration data, templates, and validation scripts. It generates a set of configuration files for each device. Ansible could cover this task, but it has the following limitations:

If you want to follow the examples, you only need to have Docker and Docker Compose installed. Clone the repository and you are ready!

Source of truth

We use YAML files, versioned with Git, as the single source of truth instead of using a database, like NetBox, or a mix of a database and text files. This provides many advantages:

- anyone can use their preferred text editor;

- the team prepares changes in branches;

- the team reviews changes using merge requests;

- the merge requests expose the changes to the generated configuration files;

- rollback to a previous state is easy; and

- it is fast.

Update (2021-11)

See “Git as a source of truth for network automation” for more detailed arguments about choosing Git instead of NetBox.

The first file is devices.yaml. It contains the device list. The second file is classifier.yaml. It defines a scope for each device. A scope is a set of keys and values. It is used in templates and to look up data associated with a device.

    $ ./run-jerikan scope to1-p1.sk1.blade-group.net
    continent: apac
    environment: prod
    groups:
    - tor
    - tor-bgp
    - tor-bgp-compute
    host: to1-p1.sk1
    location: sk1
    member: '1'
    model: dell-s4048
    os: cumulus
    pod: '1'
    shorthost: to1-p1

The device name is matched against a list of regular expressions and the scope is extended by the result of each match. For to1-p1.sk1.blade-group.net, the following subset of classifier.yaml defines its scope:

    matchers:
      - '^(([^.]*)\..*)\.blade-group\.net':
          environment: prod
          host: '\1'
          shorthost: '\2'
      - '\.(sk1)\.':
          location: '\1'
          continent: apac
      - '^to([12])-[as]?p(\d+)\.':
          member: '\1'
          pod: '\2'
      - '^to[12]-p\d+\.':
          groups:
            - tor
            - tor-bgp
            - tor-bgp-compute
      - '^to[12]-(p|ap)\d+\.sk1\.':
          os: cumulus
          model: dell-s4048
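To make the matching process concrete, here is a minimal Python sketch of such a classifier. It assumes the matchers are already loaded into a list of (regex, values) pairs and that a later match overwrites a key set by an earlier one. The names `MATCHERS`, `expand()`, and `classify()` are hypothetical: this is an illustration, not Jerikan's code, which may handle list values such as `groups` differently.

```python
import re

# Hypothetical in-memory copy of the classifier.yaml excerpt above.
MATCHERS = [
    (r"^(([^.]*)\..*)\.blade-group\.net",
     {"environment": "prod", "host": r"\1", "shorthost": r"\2"}),
    (r"\.(sk1)\.", {"location": r"\1", "continent": "apac"}),
    (r"^to([12])-[as]?p(\d+)\.", {"member": r"\1", "pod": r"\2"}),
    (r"^to[12]-p\d+\.", {"groups": ["tor", "tor-bgp", "tor-bgp-compute"]}),
    (r"^to[12]-(p|ap)\d+\.sk1\.", {"os": "cumulus", "model": "dell-s4048"}),
]


def expand(value, match):
    """Replace group references in a string (or a list of strings) using a regex match."""
    if isinstance(value, str):
        return match.expand(value)
    if isinstance(value, list):
        return [expand(item, match) for item in value]
    return value


def classify(device):
    """Build the scope of a device by applying every matching regular expression in order."""
    scope = {}
    for pattern, values in MATCHERS:
        match = re.search(pattern, device)
        if match is None:
            continue
        for key, value in values.items():
            # Assumption: a later match overwrites a key set by an earlier one.
            scope[key] = expand(value, match)
    return scope


print(classify("to1-p1.sk1.blade-group.net"))
```

For to1-p1.sk1.blade-group.net, this yields the same keys and values as the `./run-jerikan scope` output shown earlier.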

The third file is searchpaths.py. It describes which directories to search for a variable. A Python function provides a list of paths to look up in data/ for a given scope. Here is a simplified version:2

    def searchpaths(scope):
        paths = [
            "host/{scope[location]}/{scope[shorthost]}",
            "location/{scope[location]}",
            "os/{scope[os]}-{scope[model]}",
            "os/{scope[os]}",
            'common'
        ]
        for idx in range(len(paths)):
            try:
                paths[idx] = paths[idx].format(scope=scope)
            except KeyError:
                paths[idx] = None
        return [path for path in paths if path]

With this definition, the data for to1-p1.sk1.blade-group.net is looked up in the following paths:

    $ ./run-jerikan scope to1-p1.sk1.blade-group.net
    […]
    Search paths:
      host/sk1/to1-p1
      location/sk1
      os/cumulus-dell-s4048
      os/cumulus
      common

Variables are scoped using a namespace that should be specified when doing a lookup. We use the following ones:

- system for accounts, DNS, syslog servers, …

- topology for ports, interfaces, IP addresses, subnets, …

- bgp for BGP configuration

- build for templates and validation scripts

- apps for application variables

When looking up a variable in a given namespace, Jerikan looks for a YAML file named after the namespace in each directory of the search paths. For example, if we look up a variable for to1-p1.sk1.blade-group.net in the bgp namespace, the following YAML files are processed: host/sk1/to1-p1/bgp.yaml, location/sk1/bgp.yaml, os/cumulus-dell-s4048/bgp.yaml, os/cumulus/bgp.yaml, and common/bgp.yaml. The search stops at the first match.
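The first-match rule fits in a few lines of Python. This is a simplified illustration, not Jerikan's code: it assumes the YAML files have already been parsed into a dictionary keyed by their path relative to data/, and the names `FILES`, `SEARCH_PATHS`, and `lookup()`, as well as the BGP keys, are hypothetical.

```python
# Hypothetical parsed content of data/, keyed by file path (made-up BGP keys).
FILES = {
    "host/sk1/to1-p1/bgp.yaml": {"local-asn": 65001},
    "os/cumulus/bgp.yaml": {"local-asn": 65000, "graceful-restart": True},
    "common/bgp.yaml": {"graceful-restart": False, "max-paths": 8},
}

# Search paths for to1-p1.sk1.blade-group.net, as printed by ./run-jerikan scope.
SEARCH_PATHS = [
    "host/sk1/to1-p1",
    "location/sk1",
    "os/cumulus-dell-s4048",
    "os/cumulus",
    "common",
]


def lookup(files, search_paths, namespace, key):
    """Return the value of key from the first {path}/{namespace}.yaml that defines it."""
    for path in search_paths:
        data = files.get(f"{path}/{namespace}.yaml")
        if data is not None and key in data:
            return data[key]  # first match wins, more generic files are ignored
    raise KeyError(f"{namespace}/{key} not found in any search path")


print(lookup(FILES, SEARCH_PATHS, "bgp", "graceful-restart"))
```

Here, graceful-restart comes from os/cumulus/bgp.yaml because this file appears before common/bgp.yaml in the search paths.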

The schema.yaml file allows us to override this behavior by asking to merge dictionaries and arrays across all matching files.3 Here is an excerpt of this file for the system namespace:

    system:
      users:
        merge: hash
      sampling:
        merge: hash
      ansible-vars:
        merge: hash
      netbox:
        merge: hash
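A `merge: hash` entry can be honored by collecting the key from every matching file instead of stopping at the first one. In this sketch, which is an assumption about the semantics rather than Jerikan's code, only dictionaries are merged, the merge is shallow, and a more specific path wins when the same sub-key appears in several files. The data mirrors the netbox example from this article, with the model template already rendered. The `net_unknown` role and the `dns` value are made up.

```python
# Excerpt of schema.yaml, as a parsed dictionary.
SCHEMA = {"system": {"netbox": {"merge": "hash"}, "users": {"merge": "hash"}}}

# Hypothetical parsed data/ files for to1-p2.ussfo03.blade-group.net.
FILES = {
    "groups/tor-bgp-compute/system.yaml": {"netbox": {"role": "net_tor_gpu_switch"}},
    # Value shown after rendering the "~{{ model|upper }}" template.
    "os/junos/system.yaml": {"netbox": {"manufacturer": "Juniper", "model": "QFX5110-48S"}},
    "common/system.yaml": {"netbox": {"role": "net_unknown"}, "dns": ["192.0.2.53"]},
}

SEARCH_PATHS = ["groups/tor-bgp-compute", "os/junos", "common"]


def lookup(files, search_paths, schema, namespace, key):
    """Look up key, merging dictionaries across all matching files when the schema asks for it."""
    strategy = schema.get(namespace, {}).get(key, {}).get("merge")
    found = [
        files[f"{path}/{namespace}.yaml"][key]
        for path in search_paths
        if key in files.get(f"{path}/{namespace}.yaml", {})
    ]
    if not found:
        raise KeyError(f"{namespace}/{key} not found in any search path")
    if strategy != "hash":
        return found[0]  # default behavior: first match wins
    merged = {}
    # Walk from the least to the most specific file so specific values override generic ones.
    for value in reversed(found):
        merged.update(value)
    return merged


print(lookup(FILES, SEARCH_PATHS, SCHEMA, "system", "netbox"))
```

The generic `role` from common/system.yaml is overridden by the group-specific one, while `manufacturer` and `model` come from os/junos/system.yaml.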

The last feature of the source of truth is the ability to use Jinja2 templates for keys and values by prefixing them with “~”:

    # In data/os/junos/system.yaml
    netbox:
      manufacturer: Juniper
      model: "~{{ model|upper }}"

    # In data/groups/tor-bgp-compute/system.yaml
    netbox:
      role: net_tor_gpu_switch

Looking up netbox in the system namespace for to1-p2.ussfo03.blade-group.net yields the following result:

    $ ./run-jerikan scope to1-p2.ussfo03.blade-group.net
    continent: us
    environment: prod
    groups:
    - tor
    - tor-bgp
    - tor-bgp-compute
    host: to1-p2.ussfo03
    location: ussfo03
    member: '1'
    model: qfx5110-48s
    os: junos
    pod: '2'
    shorthost: to1-p2
    […]
    Search paths:
    […]
      groups/tor-bgp-compute
    […]
      os/junos
      common
    $ ./run-jerikan lookup to1-p2.ussfo03.blade-group.net system netbox
    manufacturer: Juniper
    model: QFX5110-48S
    role: net_tor_gpu_switch

This also works for structured data:

    # In groups/adm-gateway/topology.yaml
    interface-rescue:
      address: "~{{ lookup('topology', 'addresses').rescue }}"
      up:
        - "~ip route add default via {{ lookup('topology', 'addresses').rescue|ipaddr('first_usable') }} table rescue"
        - "~ip rule add from {{ lookup('topology', 'addresses').rescue|ipaddr('address') }} table rescue priority 10"

    # In groups/adm-gateway-sk1/topology.yaml
    interfaces:
      ens1f0: "~{{ lookup('topology', 'interface-rescue') }}"

This yields the following result:

    $ ./run-jerikan lookup gateway1.sk1.blade-group.net topology interfaces
    […]
    ens1f0:
      address: 121.78.242.10/29
      up:
      - ip route add default via 121.78.242.9 table rescue
      - ip rule add from 121.78.242.10 table rescue priority 10
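The “~” convention boils down to a recursive walk over the parsed YAML. Below is a minimal sketch, assuming a `render` callable that turns a template string into a value. In Jerikan, Jinja2 plays this role, with `lookup()` and the custom filters available; we do not reproduce that here. The names `render_tree()` and `fake_render()` are hypothetical. As the gateway example shows, a rendered value may itself be a structure, so the sketch inserts whatever `render` returns.

```python
from typing import Any, Callable


def render_tree(node: Any, render: Callable[[str], Any]) -> Any:
    """Render every key or value starting with "~" as a template, leaving the rest untouched."""
    if isinstance(node, str):
        return render(node[1:]) if node.startswith("~") else node
    if isinstance(node, list):
        return [render_tree(item, render) for item in node]
    if isinstance(node, dict):
        return {render_tree(key, render): render_tree(value, render)
                for key, value in node.items()}
    return node  # numbers, booleans, None


def fake_render(template: str) -> str:
    """Stand-in renderer for this example: only understands "{{ model|upper }}"."""
    return template.replace("{{ model|upper }}", "qfx5110-48s".upper())


print(render_tree({"netbox": {"manufacturer": "Juniper", "model": "~{{ model|upper }}"}},
                  fake_render))
```

Plain values such as `manufacturer: Juniper` go through unchanged, so only the prefixed entries pay the cost of template rendering.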

When putting data in the source of truth, we use the following rules:

- Don’t repeat yourself.

- Put the data in the most specific place without breaking the first rule.

- Use templates sparingly, mostly to help with the previous rules.

- Restrict the data model to what is needed for your use case.

The first rule is important. For example, when specifying IP addresses for a point-to-point link, only specify one side and deduce the other value in the templates. The last rule means you do not need to mimic a BGP YANG model to specify BGP peers and policies:

    peers:
      transit:
        cogent:
          asn: 174
          remote:
            - 38.140.30.233
            - 2001:550:2:B::1F9:1
          specific-import:
            - name: ATT-US
              as-path: ".*7018$"
              lp-delta: 50
      ix-sfmix:
        rs-sfmix:
          monitored: true
          asn: 63055
          remote:
            - 206.197.187.253
            - 206.197.187.254
            - 2001:504:30::ba06:3055:1
            - 2001:504:30::ba06:3055:2
        blizzard:
          asn: 57976
          remote:
            - 206.197.187.42
            - 2001:504:30::ba05:7976:1
          irr: AS-BLIZZARD
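The first rule mentions deducing the other end of a point-to-point link from the one specified in the source of truth. In Jerikan, this would happen in a template, with a filter such as ipaddr. The following stand-alone Python sketch only illustrates the arithmetic with the standard `ipaddress` module, for /31 and /127 links, plus /30 for IPv4. The function name `peer_interface()` is hypothetical.

```python
import ipaddress


def peer_interface(local: str) -> str:
    """Given one end of a point-to-point link (address/prefix), return the other end."""
    iface = ipaddress.ip_interface(local)
    net = iface.network
    addr = int(iface.ip)
    host_bits = net.max_prefixlen - net.prefixlen
    if host_bits == 1:
        # /31 (IPv4) or /127 (IPv6): both ends differ only in the last bit.
        other = addr ^ 1
    elif host_bits == 2 and net.version == 4:
        # /30: the usable addresses are network+1 and network+2.
        first = int(net.network_address) + 1
        if addr not in (first, first + 1):
            raise ValueError(f"{local} is not a usable address of its /30")
        other = first + 1 if addr == first else first
    else:
        raise ValueError(f"{local} is not a point-to-point prefix")
    # Build an address of the same family as the input (IPv4Address or IPv6Address).
    return f"{type(iface.ip)(other)}/{net.prefixlen}"


print(peer_interface("192.0.2.0/31"))
```

Only one address per link needs to be written down: the template computes the other one, so both ends cannot drift apart.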

Templates

The list of templates to compile for each device is stored in the source of truth, under the build namespace:

    $ ./run-jerikan lookup edge1.ussfo03.blade-group.net build templates
    data.yaml: data.j2
    config.txt: junos/main.j2
    config-base.txt: junos/base.j2
    config-irr.txt: junos/irr.j2
    $ ./run-jerikan lookup to1-p1.ussfo03.blade-group.net build templates
    data.yaml: data.j2
    config.txt: cumulus/main.j2
    frr.conf: cumulus/frr.j2
    interfaces.conf: cumulus/interfaces.j2
    ports.conf: cumulus/ports.j2
    dhcpd.conf: cumulus/dhcp.j2
    default-isc-dhcp: cumulus/default-isc-dhcp.j2
    authorized_keys: cumulus/authorized-keys.j2
    motd: linux/motd.j2
    acl.rules: cumulus/acl.j2
    rsyslog.conf: cumulus/rsyslog.conf.j2

Templates use Jinja2, the same engine used in Ansible. Jerikan ships some custom filters but also reuses some of the useful filters from Ansible, notably ipaddr. Here is an excerpt of templates/junos/base.j2 to configure DNS and NTP servers on Juniper devices:

    system {
      ntp {
        {% for ntp in lookup("system", "ntp") %}
        server {{ ntp }};
        {% endfor %}
      }
      name-server {
        {% for dns in lookup("system", "dns") %}
        {{ dns }};
        {% endfor %}
      }
    }

The equivalent template for Cisco IOS XR is:

    {% for dns in lookup('system', 'dns') %}
    domain vrf VRF-MANAGEMENT name-server {{ dns }}
    {% endfor %}
    !
    {% for syslog in lookup('system', 'syslog') %}
    logging {{ syslog }} vrf VRF-MANAGEMENT
    {% endfor %}
    !

There are three helper functions provided:

- devices() returns the list of devices matching a set of conditions on the scope. For example, devices("location==ussfo03", "groups==tor-bgp") returns the list of devices in San Francisco in the tor-bgp group. You can also omit the operator if you want the specified value to be equal to the one in the local scope. For example, devices("location") returns devices in the current location.

- lookup() does a key lookup. It takes the namespace, the key, and optionally, a device name. If not provided, the current device is assumed.

- scope() returns the scope of the provided device.
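The condition syntax of devices() can be pictured as a plain filter over the scopes of all devices. This is an illustration under assumptions, not Jerikan's implementation: only the == operator shown above is supported, a condition on a list-valued key (such as groups) matches when the list contains the expected value, and the scopes are passed explicitly instead of being computed by the classifier. The helper names and the sample scopes are hypothetical.

```python
def _matches(scope, local_scope, condition):
    """Evaluate one condition such as "groups==edge" or a bare key such as "location"."""
    if "==" in condition:
        key, expected = condition.split("==", 1)
    else:
        # Bare key: compare with the value from the current device's scope.
        key, expected = condition, local_scope.get(condition)
        if expected is None:
            return False
    value = scope.get(key)
    if isinstance(value, list):
        return expected in value
    return value == expected


def devices(scopes, local_scope, *conditions):
    """Return the names of the devices whose scope satisfies every condition."""
    return [name for name, scope in scopes.items()
            if all(_matches(scope, local_scope, c) for c in conditions)]


# Hypothetical, trimmed-down scopes.
SCOPES = {
    "edge1.ussfo03.blade-group.net": {"location": "ussfo03", "groups": ["edge"]},
    "edge2.ussfo03.blade-group.net": {"location": "ussfo03", "groups": ["edge"]},
    "edge1.sk1.blade-group.net": {"location": "sk1", "groups": ["edge"]},
    "to1-p1.ussfo03.blade-group.net": {"location": "ussfo03", "groups": ["tor", "tor-bgp"]},
}
local = SCOPES["edge1.ussfo03.blade-group.net"]
print(devices(SCOPES, local, "location", "groups==edge"))
```

Note that the current device is part of the result. This is why the iBGP template below filters it out with `if neighbor != device`.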

Here is how you would define iBGP sessions between edge devices in the same location:

    {% for neighbor in devices("location", "groups==edge") if neighbor != device %}
    {% for address in lookup("topology", "addresses", neighbor).loopback|tolist %}
    protocols bgp group IPV{{ address|ipv }}-EDGES-IBGP {
      neighbor {{ address }} {
        description "IPv{{ address|ipv }}: iBGP to {{ neighbor }}";
      }
    }
    {% endfor %}
    {% endfor %}

We also have a global key-value store to save information to be reused in another template or device. This is quite useful to automatically build DNS records. First, “capture” the IP address inserted into a template with store() as a filter:

    interface Loopback0
     description 'Loopback:'
    {% for address in lookup('topology', 'addresses').loopback|tolist %}
     ipv{{ address|ipv }} address {{ address|store('addresses', 'Loopback0')|ipaddr('cidr') }}
    {% endfor %}
    !

Then, reuse it later to build DNS records by iterating over store():5

    {% for device, ip, interface in store('addresses') %}
    {% set interface = interface|replace('/', '-')|replace('.', '-')|replace(':', '-') %}
    {% set name = '{}.{}'.format(interface|lower, device) %}
    {{ name }}. IN {{ 'A' if ip|ipv4 else 'AAAA' }} {{ ip|ipaddr('address') }}
    {% endfor %}
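The capture-then-iterate pattern can be reproduced with a small Python class. This is a sketch under assumptions: `Store`, `capture()`, and `dns_records()` are hypothetical names, the captured tuples follow the (device, ip, interface) order used by the template above, and the store only lives in memory for the duration of one build.

```python
import ipaddress
from collections import defaultdict


class Store:
    """Global key-value store shared by all templates during a build."""

    def __init__(self):
        self._entries = defaultdict(list)

    def capture(self, value, device, key, *extra):
        """Filter-like helper: record (device, value, *extra) under key and return value unchanged."""
        self._entries[key].append((device, value, *extra))
        return value

    def __call__(self, key):
        """Function-like helper: return everything recorded under key."""
        return list(self._entries[key])


def dns_records(store):
    """Turn captured addresses into A/AAAA records, like the DNS template above."""
    records = []
    for device, ip, interface in store("addresses"):
        label = interface.replace("/", "-").replace(".", "-").replace(":", "-").lower()
        address = ipaddress.ip_interface(ip)
        rtype = "A" if address.version == 4 else "AAAA"
        records.append(f"{label}.{device}. IN {rtype} {address.ip}")
    return records


store = Store()
store.capture("192.0.2.1/32", "edge1.sk1.blade-group.net", "addresses", "Loopback0")
store.capture("2001:db8::1/128", "edge1.sk1.blade-group.net", "addresses", "Loopback0")
print("\n".join(dns_records(store)))
```

Because `capture()` returns its input, it can sit in the middle of a filter chain without altering the generated configuration.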

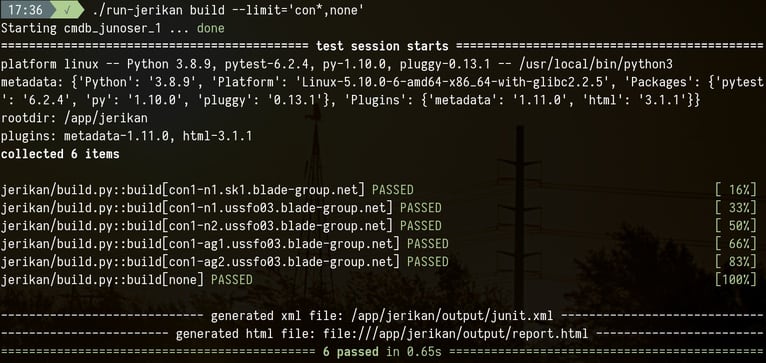

Templates are compiled locally with ./run-jerikan build. The --limit argument restricts the devices to generate configuration files for. The build is not done in parallel because a template may depend on the data collected by another template. Currently, it takes 1 minute to compile around 3000 files spanning 800 devices.

When an error occurs, a detailed traceback is displayed, including the template name, the line number and the value of all visible variables. This is a major time-saver compared to Ansible!

    templates/opengear/config.j2:15: in top-level template code
        config.interfaces.{{ interface }}.netmask {{ infos.adddress | ipaddr("netmask") }}
            continent = 'us'
            device = 'con1-ag2.ussfo03.blade-group.net'
            environment = 'prod'
            host = 'con1-ag2.ussfo03'
            infos = {'address': '172.30.24.19/21'}
            interface = 'wan'
            location = 'ussfo03'
            loop = <LoopContext 1/2>
            member = '2'
            model = 'cm7132-2-dac'
            os = 'opengear'
            shorthost = 'con1-ag2'
    _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
        value = JerkianUndefined, query = 'netmask', version = False, alias = 'ipaddr'
    […]
            # Check if value is a list and parse each element
            if isinstance(value, (list, tuple, types.GeneratorType)):
                _ret = [ipaddr(element, str(query), version) for element in value]
                return [item for item in _ret if item]
    >       elif not value or value is True:
    E       jinja2.exceptions.UndefinedError: 'dict object' has no attribute 'adddress'

We don’t have general-purpose rules for writing templates. As for the source of truth, there is no need to create generic templates able to produce any BGP configuration. There is a balance to be found between readability and avoiding duplication. Templates can become scary and complex: sometimes, it’s better to write a filter or a function in jerikan/jinja.py. Mastering Jinja2 is a good investment. Take time to browse through our templates, as some of them show interesting features.

Checks

Optionally, each configuration file can be validated by a script in the checks/ directory. Jerikan looks up the key checks in the build namespace to know which checks to run:

    $ ./run-jerikan lookup edge1.ussfo03.blade-group.net build checks
    - description: Juniper configuration file syntax check
      script: checks/junoser
      cache:
        input: config.txt
        output: config-set.txt
    - description: check YAML data
      script: checks/data.yaml
      cache: data.yaml

In the above example, checks/junoser is executed if there is a change to the generated config.txt file. It also outputs a transformed version of the configuration file, which is easier to understand when using diff. Junoser checks a Junos configuration file using Juniper’s XML schema definition for Netconf.6 On error, Jerikan displays:

    jerikan/build.py:127: RuntimeError
    -------------- Captured syntax check with Junoser call --------------
    P: checks/junoser edge2.ussfo03.blade-group.net
    C: /app/jerikan
    O:
    E: Invalid syntax: set system syslog archive size 10m files 10 word-readable
    S: 1
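Think of the cache key of a check as a fingerprint of its input file: when the fingerprint has not changed since the last successful run, the check can be skipped. Here is a minimal in-memory sketch of that idea using SHA-256 digests. The class name and the digest-based approach are assumptions, not Jerikan's implementation. A real implementation would also need to persist the cache between builds and keep the generated output file, such as config-set.txt.

```python
import hashlib
from typing import Callable, Dict, Tuple


class CheckCache:
    """Skip a check when its input is identical to the last successfully checked version."""

    def __init__(self):
        self._digests: Dict[Tuple[str, str], str] = {}

    def run(self, device: str, filename: str, content: bytes,
            check: Callable[[bytes], None]) -> bool:
        """Run check on content unless cached; return True if the check actually ran."""
        key = (device, filename)
        digest = hashlib.sha256(content).hexdigest()
        if self._digests.get(key) == digest:
            return False
        check(content)  # expected to raise on failure, so nothing gets cached
        self._digests[key] = digest
        return True


cache = CheckCache()
config = b"set system host-name edge2\n"
print(cache.run("edge2.ussfo03.blade-group.net", "config.txt", config, lambda content: None))  # True
print(cache.run("edge2.ussfo03.blade-group.net", "config.txt", config, lambda content: None))  # False
```

Recording the digest only after a successful check ensures that a failing configuration is checked again on the next build.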

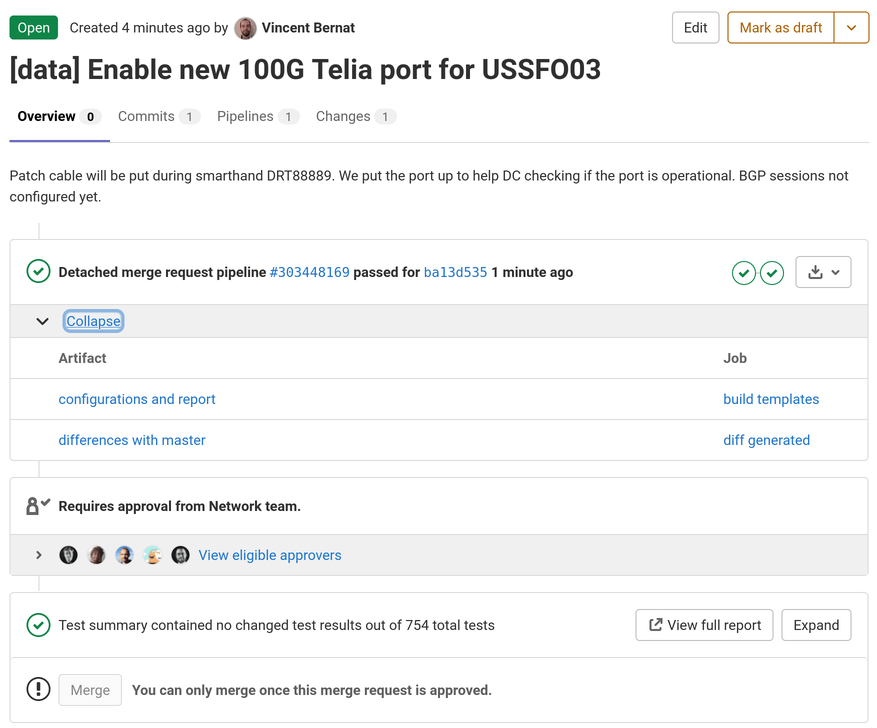

Integration into GitLab CI

The next step is to compile the templates using a CI. As we are using GitLab, Jerikan ships with a .gitlab-ci.yml file. When we need to make a change, we create a dedicated branch and a merge request. GitLab compiles the templates using the same environment we use on our laptops and stores them as an artifact.

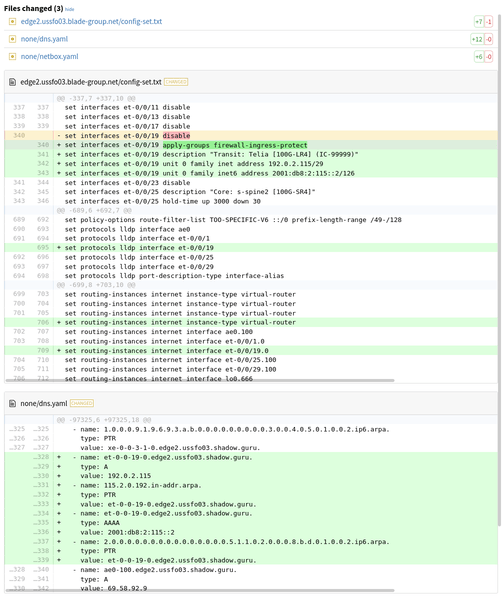

Before approving the merge request, another team member looks not only at the changes in data and templates but also at the differences in the generated configuration files:

Ansible

After Jerikan has built the configuration files, Ansible takes over. It is also packaged as a Docker image to avoid the hassle of maintaining the right Python virtual environment and to ensure everyone is using the same versions.

Inventory

Jerikan has generated an inventory file. It contains all the managed devices, the variables defined for each of them and the groups converted to Ansible groups:

    ob1-n1.sk1.blade-group.net ansible_host=172.29.15.12 ansible_user=blade ansible_connection=network_cli ansible_network_os=ios
    ob2-n1.sk1.blade-group.net ansible_host=172.29.15.13 ansible_user=blade ansible_connection=network_cli ansible_network_os=ios
    ob1-n1.ussfo03.blade-group.net ansible_host=172.29.15.12 ansible_user=blade ansible_connection=network_cli ansible_network_os=ios
    none ansible_connection=local

    [oob]
    ob1-n1.sk1.blade-group.net
    ob2-n1.sk1.blade-group.net
    ob1-n1.ussfo03.blade-group.net

    [os-ios]
    ob1-n1.sk1.blade-group.net
    ob2-n1.sk1.blade-group.net
    ob1-n1.ussfo03.blade-group.net

    [model-c2960s]
    ob1-n1.sk1.blade-group.net
    ob2-n1.sk1.blade-group.net
    ob1-n1.ussfo03.blade-group.net

    [location-sk1]
    ob1-n1.sk1.blade-group.net
    ob2-n1.sk1.blade-group.net

    [location-ussfo03]
    ob1-n1.ussfo03.blade-group.net

    [in-sync]
    ob1-n1.sk1.blade-group.net
    ob2-n1.sk1.blade-group.net
    ob1-n1.ussfo03.blade-group.net
    none

in-sync is a special group for devices whose configuration should match the golden configuration. Ansible should be able to push configurations to this group daily and unattended. The mid-term goal is to cover all devices.

none is a special device for tasks not related to a specific host. This includes synchronizing NetBox, IRR objects, and the DNS, updating the RPKI, and building the geofeed files.
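Generating such an inventory is mostly string formatting. The sketch below assumes the per-device variables and scopes are already known. It derives Ansible groups from the scope's groups, plus os-, model-, and location-prefixed groups as in the excerpt above, and appends the special none host. The function names and the exact layout are hypothetical, not Jerikan's.

```python
def ansible_groups(scope):
    """Convert a device scope into a list of Ansible group names."""
    groups = list(scope.get("groups", []))
    for key in ("os", "model", "location"):
        if key in scope:
            groups.append(f"{key}-{scope[key]}")
    return groups


def render_inventory(devices, in_sync):
    """devices maps a name to (hostvars, scope); in_sync lists hosts matching the golden config."""
    lines = []
    membership = {}
    for name, (hostvars, scope) in devices.items():
        pairs = " ".join(f"{var}={value}" for var, value in hostvars.items())
        lines.append(f"{name} {pairs}")
        for group in ansible_groups(scope):
            membership.setdefault(group, []).append(name)
    # Pseudo-host for tasks not tied to a device (NetBox, IRR, DNS, ...).
    lines.append("none ansible_connection=local")
    membership["in-sync"] = list(in_sync)
    for group, members in membership.items():
        lines.append(f"[{group}]")
        lines.extend(members)
    return "\n".join(lines) + "\n"


inventory = render_inventory(
    {"ob1-n1.sk1.blade-group.net": (
        {"ansible_host": "172.29.15.12", "ansible_user": "blade",
         "ansible_connection": "network_cli", "ansible_network_os": "ios"},
        {"groups": ["oob"], "os": "ios", "model": "c2960s", "location": "sk1"})},
    in_sync=["ob1-n1.sk1.blade-group.net", "none"],
)
print(inventory)
```

Deriving groups from the scope keeps Ansible host patterns such as `edge:&location-sk1` consistent with the classifier, without maintaining a second list by hand.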

Playbook

We use a single playbook for all devices. It is described in the ansible/playbooks/site.yaml file. Here is a shortened version:

    - hosts: adm-gateway:!done
      strategy: mitogen_linear
      roles:
        - blade.linux
        - blade.adm-gateway
        - done

    - hosts: os-linux:!done
      strategy: mitogen_linear
      roles:
        - blade.linux
        - done

    - hosts: os-junos:!done
      gather_facts: false
      roles:
        - blade.junos
        - done

    - hosts: os-opengear:!done
      gather_facts: false
      roles:
        - blade.opengear
        - done

    - hosts: none:!done
      gather_facts: false
      roles:
        - blade.none
        - done

A host executes only one of the plays. For example, a Junos device executes the blade.junos role. Once a play has been executed, the device is added to the done group and the other plays are skipped.
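The effect of the done group can be simulated in a few lines of Python: plays are evaluated in order, and a host matching several host patterns only runs the first one. This models the playbook's behavior and does not reflect how Ansible evaluates patterns internally. The group memberships below are hypothetical. The simulation also suggests why the adm-gateway play comes before the generic os-linux one: gateways are Linux servers too.

```python
# Plays from the shortened site.yaml above: (group, roles), evaluated top to bottom.
PLAYS = [
    ("adm-gateway", ["blade.linux", "blade.adm-gateway"]),
    ("os-linux", ["blade.linux"]),
    ("os-junos", ["blade.junos"]),
    ("os-opengear", ["blade.opengear"]),
    ("none", ["blade.none"]),
]


def assign_plays(host_groups):
    """Map each host to the roles of the first play whose group it belongs to ("group:!done")."""
    done = set()
    assignment = {}
    for group, roles in PLAYS:
        for host, groups in host_groups.items():
            if host in done or group not in groups:
                continue
            assignment[host] = roles
            done.add(host)  # what the "done" role achieves by adding the host to the done group
    return assignment


print(assign_plays({
    "gateway1.sk1.blade-group.net": {"adm-gateway", "os-linux"},
    "edge2.ussfo03.blade-group.net": {"edge", "os-junos"},
}))
```

Without the `:!done` exclusion, gateway1.sk1 would also run the os-linux play and apply blade.linux a second time.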

The playbook can be executed with the configuration files generated by the GitLab CI using the ./run-ansible-gitlab command. This is a wrapper around Docker and the ansible-playbook command, and it accepts the same arguments. To deploy the configuration on the edge devices of the SK1 datacenter in “check” mode, we use:

    $ ./run-ansible-gitlab playbooks/site.yaml --limit='edge:&location-sk1' --diff --check
    […]
    PLAY RECAP *************************************************************
    edge1.sk1.blade-group.net  : ok=6 changed=0 unreachable=0 failed=0 skipped=3 rescued=0 ignored=0
    edge2.sk1.blade-group.net  : ok=5 changed=0 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

We have some rules when writing roles:

- --check must detect if a change is needed;

- --diff must provide a visualization of the planned changes;

- --check and --diff must not display anything if there is nothing to change;

- writing a custom module tailored to our needs is a valid solution;

- the whole device configuration is managed;7

- secrets must be stored in Vault;

- templates should be avoided as we have Jerikan for that; and

- avoid duplication and reuse tasks.8
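The first three rules describe a contract that a custom module can fulfill by comparing the running configuration with the golden one generated by Jerikan. The Python sketch below, built on `difflib` from the standard library, shows the idea: `changed` is derived from the diff, and an empty diff produces no output. `plan_change()` is a hypothetical helper, not an Ansible API. A real module would report these values through Ansible's module interface.

```python
import difflib
from typing import Tuple


def plan_change(device: str, running: str, golden: str) -> Tuple[bool, str]:
    """Return (changed, diff) comparing the running configuration with the golden one."""
    diff = "".join(difflib.unified_diff(
        running.splitlines(keepends=True),
        golden.splitlines(keepends=True),
        fromfile=f"{device} (running)",
        tofile=f"{device} (golden)",
    ))
    # Nothing to change means an empty diff, hence nothing displayed in --check/--diff mode.
    return bool(diff), diff


changed, diff = plan_change(
    "edge1.sk1.blade-group.net",
    "hostname edge1\nntp server 192.0.2.123\n",
    "hostname edge1\nntp server 192.0.2.124\n",
)
print(changed)
print(diff, end="")
```

Because the comparison covers the whole configuration, it also catches hand-made changes that a playbook managing only a subset would miss.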

We avoid using collections from Ansible Galaxy, the exception being collections to connect and interact with vendor devices, like the cisco.iosxr collection. The quality of Ansible Galaxy collections varies widely, and each one is an additional maintenance burden. It seems better to write roles tailored to our needs. The collections we use are listed in ci/ansible/ansible-galaxy.yaml. We use Mitogen to get a 10× speedup on Ansible executions on Linux hosts.

We also have a few playbooks for operational purposes: upgrading the OS version, isolating an edge router, etc. We were also planning to add operational checks in roles, such as “are all the BGP sessions up?” They could have been used to validate a deployment and to roll back if there was an issue.

Currently, our playbooks are run from our laptops. To keep track of executions, we use ARA. A weekly dry run on devices in the in-sync group also provides a dashboard showing which devices we need to run Ansible on.

Configuration data and templates

Jerikan ships with pre-populated data and templates matching the configuration of our USSFO03 and SK1 datacenters. They do not exist anymore but, we promise, all this was used in production back in the day! 😢

Notably, you can find the configuration for:

- Our edge routers

  Some are running Junos, like edge2.ussfo03, the others IOS XR, like edge1.sk1. The implemented functionalities are similar in both cases and we could swap one for the other. They include the BGP configuration for transits, peerings, and IXs, as well as the associated BGP policies. PeeringDB is queried to get the maximum number of prefixes to accept for peerings. bgpq3 and a containerized IRRd help to filter received routes. A firewall is added to protect the routing engine. Both IPv4 and IPv6 are configured.

- Our BGP-based fabric

  BGP is used inside the datacenter9 and is extended to bare-metal hosts. The configuration is automatically derived from the device location and the port number.10 Top-of-the-rack devices use passive BGP sessions on ports towards servers. They also serve a provisioning network to let servers boot using DHCP and PXE, acting as the DHCP server. The design is multivendor: some devices are running Cumulus Linux, like to1-p1.ussfo03, while others are running Junos, like to1-p2.ussfo03.

- Our out-of-band fabric

  We are using Cisco Catalyst 2960 switches to build an L2 out-of-band network. To provide redundancy and save a few bucks on wiring, we build small loops and run the spanning-tree protocol. See ob1-p1.ussfo03. It is redundantly connected to our gateway servers. We also use Opengear devices for console access. See con1-n1.ussfo03.

- Our administrative gateways

  These Linux servers have multiple purposes: SSH jump boxes, rescue connection, direct access to the out-of-band network, zero-touch provisioning of network devices,11 Internet access for management flows, centralization of the console servers using Conserver, and an API for the autoconfiguration of BGP sessions for bare-metal servers. They are the first servers installed in a new datacenter and are used to provision everything else. Check both the generated files and the associated Ansible tasks.

Update (2021-10)

I gave a talk about Jerikan and Ansible during FRnOG #34. It is essentially the same as this article.

1. Ansible does not even provide a line number when there is an error in a template. You may need to find the problem by bisecting.

        $ ansible --version
        ansible 2.10.8
        […]
        $ cat test.j2
        Hello {{ name }}!
        $ ansible all -i localhost, \
        >   --connection=local \
        >   -m template \
        >   -a "src=test.j2 dest=test.txt"
        localhost | FAILED! => {
            "changed": false,
            "msg": "AnsibleUndefinedVariable: 'name' is undefined"
        }

2. You may recognize the same concepts as in Hiera, the hierarchical key-value store from Puppet. At first, we were using Jerakia, a similar independent store exposing an HTTP REST interface. However, the lookup overhead was too large for our use. Jerikan implements the same functionality within a Python function.

3. This file could be extended to specify a schema to validate the source of truth and detect errors earlier. It could also provide default values to keep templates from interpreting incomplete structures. Yamale could be a starting point for such a feature.

4. The list of available filters is mangled inside jerikan/jinja.py. This is a leftover from the fact that we do not maintain Jerikan as standalone software.

5. This is a bit confusing: we have a store() filter and a store() function. With Jinja2, filters and functions live in two different namespaces.

6. We are using a fork with some modifications to be able to validate our configurations and to expose an HTTP service reducing the time spent on each configuration check.

7. There is a trend in network automation to automate a configuration subset, for example by having a playbook to create a new BGP session. We believe this is wrong: with time, your configuration will drift from its expected state, notably because hand-made changes will go undetected.

8. See ansible/roles/blade.linux/tasks/firewall.yaml and ansible/roles/blade.linux/tasks/interfaces.yaml. They are meant to be called when needed, using import_role.

9. We also have some datacenters using BGP EVPN VXLAN at medium scale with Juniper devices. As they are still in production today, we didn’t include this feature, but we may publish it in the future.

10. In retrospect, this may not be a good idea unless you are pretty sure everything is uniform (number of switches for each layer, number of ports). This was not our case. We now think it is better to assign a prefix to each device and write it in the source of truth.

11. Non-Linux devices are upgraded and configured unattended. Cumulus Linux devices are automatically upgraded on install, but the final configuration has to be pushed using Ansible: we didn’t want to duplicate the configuration process using another tool.