Akvorado release 2.0

Vincent Bernat

Akvorado 2.0 was released today! Akvorado collects network flows with IPFIX and sFlow. It enriches flows and stores them in a ClickHouse database. Users can browse the data through a web console. This release introduces an important architectural change and other smaller improvements. Let’s dive in! 🤿

    $ git diff --shortstat v1.11.5
     493 files changed, 25015 insertions(+), 21135 deletions(-)

New “outlet” service

The major change in Akvorado 2.0 is splitting the inlet service into two parts: the inlet and the outlet. Previously, the inlet handled all flow processing: receiving, decoding, and enrichment. Flows were then sent to Kafka for storage in ClickHouse:

Network flows reach the inlet service using UDP, an unreliable protocol. The inlet must process them fast enough to avoid losing packets. To handle a high number of flows, the inlet spawns several sets of workers to receive flows, fetch metadata, and assemble enriched flows for Kafka. Many configuration options existed for scaling, which increased complexity for users. The code needed to avoid blocking at any cost, making the processing pipeline complex and sometimes unreliable, particularly the BMP receiver.[1] Adding new features became difficult without making the problem worse.[2]

In Akvorado 2.0, the inlet receives flows and pushes them to Kafka without decoding them. The new outlet service handles the remaining tasks:

This change goes beyond a simple split:[3] the outlet now reads flows from Kafka and pushes them to ClickHouse, two tasks that Akvorado did not handle before. The outlet uses ch-go, a low-level Go client for ClickHouse, and batches flows heavily to increase efficiency and reduce the load on ClickHouse. When batches are too small, asynchronous inserts are used (e20645). The number of outlet workers scales dynamically (e5a625) based on the target batch size and latency (50,000 flows and 5 seconds by default).

This new architecture also allows us to simplify and optimize the code. The outlet fetches metadata synchronously (e20645). The BMP component becomes simpler by removing cooperative multitasking (3b9486). Reusing the same RawFlow object to decode protobuf-encoded flows from Kafka reduces pressure on the garbage collector (8b580f).

The effect on Akvorado’s overall performance was somewhat uncertain, but a user reported 35% lower CPU usage after migrating from the previous version, plus resolution of the long-standing BMP component issue. 🥳

Other changes

This new version includes many miscellaneous changes, such as completion for source and destination ports (f92d2e), and automatic restart of the orchestrator service (0f72ff) when the configuration changes, to avoid a common pitfall for newcomers.

Let’s focus on some key areas for this release: observability, documentation, CI, Docker, Go, and JavaScript.

Observability

Akvorado exposes metrics to provide visibility into the processing pipeline and help troubleshoot issues. These are available through Prometheus HTTP metrics endpoints, such as /api/v0/inlet/metrics. With the introduction of the outlet, many metrics moved. Some were also renamed (4c0b15) to match Prometheus best practices. Kafka consumer lag was added as a new metric (e3a778).

If you do not have your own observability stack, the Docker Compose setup shipped with Akvorado provides one. You can enable it by activating the profiles introduced for this purpose (529a8f).

The prometheus profile ships Prometheus to store metrics and Alloy to collect them (2b3c46, f81299, and 8eb7cd). Redis and Kafka metrics are collected through the exporter bundled with Alloy (560113). Other metrics are exposed using Prometheus metrics endpoints and are automatically fetched by Alloy with the help of some Docker labels, similar to what is done to configure Traefik. cAdvisor was also added (83d855) to provide some container-related metrics.

The loki profile ships Loki to store logs (45c684). While Alloy can collect and ship logs to Loki, its parsing abilities are limited: I could not find a way to preserve all metadata associated with structured logs produced by many applications, including Akvorado. Vector replaces Alloy for log collection (95e201) and features a domain-specific language, VRL, to transform logs. Annoyingly, Vector currently cannot retrieve Docker logs from before it was started.

Finally, the grafana profile ships Grafana, but the shipped dashboards are broken. Fixing them is planned for a future version.

Documentation

The Docker Compose setup provided by Akvorado makes it easy to get the web interface up and running quickly. However, Akvorado requires a few mandatory steps to be functional. It ships with comprehensive documentation, including a chapter about troubleshooting problems. I hoped this documentation would reduce the support burden. It is difficult to know if it works. Happy users rarely report their success, while some users open discussions asking for help without reading much of the documentation.

In this release, the documentation was significantly improved.

    $ git diff --shortstat v1.11.5 -- console/data/docs
     10 files changed, 1873 insertions(+), 1203 deletions(-)

The documentation was updated (fc1028) to match Akvorado’s new architecture. The troubleshooting section was rewritten (17a272). Instructions on how to improve ClickHouse performance when upgrading from versions earlier than 1.10.0 were added (5f1e9a). An LLM proofread the entire content (06e3f3). Developer-focused documentation was also improved (548bbb, e41bae, and 871fc5).

From a usability perspective, table of contents sections are now collapsible (c142e5). Admonitions help draw user attention to important points (8ac894).

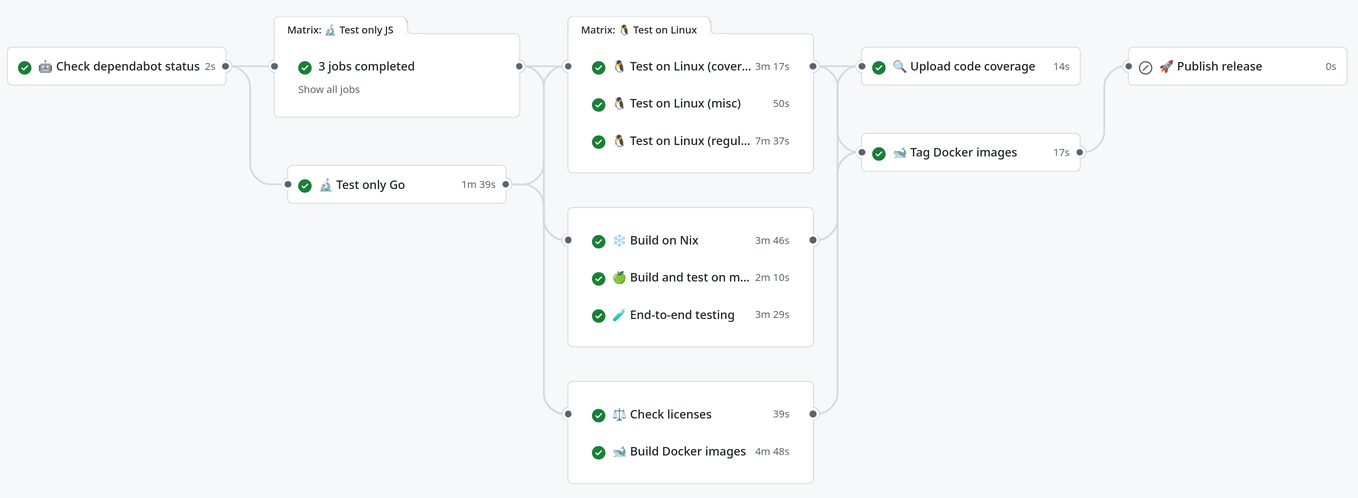

Continuous integration

This release includes efforts to speed up continuous integration on GitHub. Coverage and race tests run in parallel (6af216 and fa9e48). The Docker image builds during the tests but gets tagged only after they succeed (8b0dce).

End-to-end tests (883e19) ensure the shipped Docker Compose setup works as expected. Hurl runs tests on various HTTP endpoints, particularly to verify metrics (42679b and 169fa9). For example:

    ## Test inlet has received NetFlow flows
    GET http://127.0.0.1:8080/prometheus/api/v1/query
    [Query]
    query: sum(akvorado_inlet_flow_input_udp_packets_total{job="akvorado-inlet",listener=":2055"})
    HTTP 200
    [Captures]
    inlet_receivedflows: jsonpath "$.data.result[0].value[1]" toInt
    [Asserts]
    variable "inlet_receivedflows" > 10

    ## Test inlet has sent them to Kafka
    GET http://127.0.0.1:8080/prometheus/api/v1/query
    [Query]
    query: sum(akvorado_inlet_kafka_sent_messages_total{job="akvorado-inlet"})
    HTTP 200
    [Captures]
    inlet_sentflows: jsonpath "$.data.result[0].value[1]" toInt
    [Asserts]
    variable "inlet_sentflows" >= {{ inlet_receivedflows }}

Docker

Akvorado ships with a comprehensive Docker Compose setup to help users get started quickly. It ensures a consistent deployment, eliminating many configuration-related issues. It also serves as living documentation of the complete architecture.

This release brings some small enhancements around Docker:

- an example to configure Traefik for TLS with Let’s Encrypt (1a27bb),

- HTTP compression (bee9a5), and

- support of IPv6 (a74a41).

Previously, many Docker images were pulled from the Bitnami Containers library. However, VMware acquired Bitnami in 2019 and Broadcom acquired VMware in 2023. As a result, Bitnami images were deprecated in less than a month. This was not really a surprise.[4] Previous versions of Akvorado had already started moving away from them. In this release, the Apache project’s Kafka image replaces the Bitnami one (1eb382). Thanks to the switch to KRaft mode, Zookeeper is no longer needed (0a2ea1, 8a49ca, and f65d20).

Akvorado’s Docker images were previously compiled with Nix. However, building AArch64 images on x86-64 is slow because it relies on QEMU userland emulation. The updated Dockerfile uses multi-stage and multi-platform builds: one stage builds the JavaScript part on the host platform, one stage builds the Go part cross-compiled on the host platform, and the final stage assembles the image on top of a slim distroless image (268e95 and d526ca).

    # This is a simplified version
    FROM --platform=$BUILDPLATFORM node:20-alpine AS build-js
    RUN apk add --no-cache make
    WORKDIR /build
    COPY console/frontend console/frontend
    COPY Makefile .
    RUN make console/data/frontend

    FROM --platform=$BUILDPLATFORM golang:alpine AS build-go
    RUN apk add --no-cache make curl zip
    WORKDIR /build
    COPY . .
    COPY --from=build-js /build/console/data/frontend console/data/frontend
    RUN go mod download
    RUN make all-indep
    ARG TARGETOS TARGETARCH TARGETVARIANT VERSION
    RUN make

    FROM gcr.io/distroless/static:latest
    COPY --from=build-go /build/bin/akvorado /usr/local/bin/akvorado
    ENTRYPOINT [ "/usr/local/bin/akvorado" ]

When building for multiple platforms with --platform linux/amd64,linux/arm64,linux/arm/v7, the build steps up to the ARG TARGETOS TARGETARCH TARGETVARIANT VERSION line execute only once for all platforms, because they do not depend on the target platform. This significantly speeds up the build. 🚅

Akvorado now ships Docker images for these platforms: linux/amd64, linux/amd64/v3, linux/arm64, and linux/arm/v7. When requesting ghcr.io/akvorado/akvorado, Docker selects the best image for the current CPU. On x86-64, there are two choices. If your CPU is recent enough, Docker downloads linux/amd64/v3. This version contains additional optimizations and should run faster than the linux/amd64 version. It would be interesting to ship an image for linux/arm64/v8.2, but Docker does not support the same mechanism for AArch64 yet (792808).

Go

This release includes many changes related to Go but not visible to the users.

Toolchain

In the past, Akvorado supported the two latest Go versions, preventing immediate use of the latest enhancements. The goal was to allow users of stable distributions to use Go versions shipped with their distribution to compile Akvorado. However, this became frustrating when interesting features, like go tool, were released. Akvorado 2.0 requires Go 1.25 (77306d) but can be compiled with older toolchains by automatically downloading a newer one (94fb1c).[5] Users can still override GOTOOLCHAIN to revert this decision. The recommended toolchain updates weekly through CI to ensure we get the latest minor release (5b11ec). This change also simplifies updates to newer versions: only go.mod needs updating.

Thanks to this change, Akvorado now uses wg.Go() (77306d) and I have started converting some unit tests to the new testing/synctest package (bd787e, 7016d8, and 159085).

Testing

When testing equality, I use a helper function Diff() to display the differences when it fails:

    got := input.Keys()
    expected := []int{1, 2, 3}
    if diff := helpers.Diff(got, expected); diff != "" {
        t.Fatalf("Keys() (-got, +want):\n%s", diff)
    }

This function uses kylelemons/godebug. This package is no longer maintained and has some shortcomings: for example, by default, it does not compare struct private fields, which may cause unexpectedly successful tests. I replaced it with google/go-cmp, which is stricter and has better output (e2f1df).

Another package for Kafka

Another change is the switch from Sarama to franz-go to interact with Kafka (756e4a and 2d26c5). The main motivation for this change is to get a better concurrency model. Sarama heavily relies on channels and it is difficult to understand the lifecycle of an object handed to this package. franz-go uses a more modern approach with callbacks[6] that is both more performant and easier to understand. It also ships with a package to spawn fake Kafka broker clusters, which is more convenient than the mocking functions provided by Sarama.

Improved routing table for BMP

To store its routing table, the BMP component used kentik/patricia, an implementation of a patricia tree focused on reducing garbage collection pressure. gaissmai/bart is a more recent alternative using an adaptation of Donald Knuth’s ART algorithm that promises better performance and delivers it: 90% faster lookups and 27% faster insertions (92ee2e and fdb65c).

Unlike kentik/patricia, gaissmai/bart does not help efficiently store values attached to each prefix. I adapted the same approach as kentik/patricia to store route lists for each prefix: store a 32-bit index for each prefix, and use it to build a 64-bit index for looking up routes in a map. This leverages Go’s efficient map structure.

gaissmai/bart also supports a lockless routing table version, but this is not simple because we would need to extend this to the map storing the routes and to the interning mechanism. I also attempted to use Go’s new unique package to replace the intern package included in Akvorado, but performance was worse.[7]
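For readers unfamiliar with interning, here is a minimal sketch of a pool that hands out 32-bit references for deduplicated values. It is not Akvorado's intern package: `Pool`, `Ref`, `Put`, and `Get` are hypothetical names, and reference counting and release of unused values are omitted.

```go
package main

import "fmt"

// Ref is a 32-bit handle to an interned value. Zero means "no value".
type Ref uint32

// Pool deduplicates comparable values and returns compact references.
type Pool[T comparable] struct {
	values []T       // values[ref] is the interned value
	refs   map[T]Ref // reverse index used for deduplication
}

// NewPool returns an empty pool. Slot 0 is reserved for the zero Ref.
func NewPool[T comparable]() *Pool[T] {
	var zero T
	return &Pool[T]{values: []T{zero}, refs: make(map[T]Ref)}
}

// Put interns v and returns its reference. Equal values share one Ref.
func (p *Pool[T]) Put(v T) Ref {
	if r, ok := p.refs[v]; ok {
		return r
	}
	r := Ref(len(p.values))
	p.values = append(p.values, v)
	p.refs[v] = r
	return r
}

// Get returns the value behind r.
func (p *Pool[T]) Get(r Ref) T {
	return p.values[r]
}

func main() {
	pool := NewPool[string]()
	a := pool.Put("64496 64497")
	b := pool.Put("64496 64497")
	c := pool.Put("64511")
	fmt.Println(a == b, a == c, pool.Get(c))
}
```

A structure holding a `Ref` spends 4 bytes per interned attribute, whereas a handle from the standard unique package is pointer-sized (8 bytes on 64-bit platforms).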

Miscellaneous

Previous versions of Akvorado were using a custom Protobuf encoder for performance and flexibility. With the introduction of the outlet service, Akvorado only needs a simple static schema, so this code was removed. However, it is possible to enhance performance with planetscale/vtprotobuf (e49a74 and 8b580f).

Moreover, the dependency on protoc, a C++ program, was somewhat annoying. Therefore, Akvorado now uses buf, written in Go, to convert a Protobuf schema into Go code (f4c879).

Another small optimization to reduce the size of the Akvorado binary by 10 MB was to compress the static assets embedded in Akvorado in a ZIP file. It includes the ASN database, as well as the SVG images for the documentation. A small layer of code makes this change transparent (b1d638 and e69b91).

JavaScript

Recently, two large supply-chain attacks hit the JavaScript ecosystem: one affecting the popular packages chalk and debug and another impacting the popular package @ctrl/tinycolor. These attacks also exist in other ecosystems, but JavaScript is a prime target due to heavy use of small third-party dependencies. The previous version of Akvorado relied on 653 dependencies.

npm-run-all was removed (3424e8, 132 dependencies). patch-package was removed (625805 and e85ff0, 69 dependencies) by moving missing TypeScript definitions to env.d.ts. eslint was replaced with oxlint, a linter written in Rust (97fd8c, 125 dependencies, including the plugins).

I switched from npm to Pnpm, an alternative package manager (fce383). Pnpm does not run install scripts by default[8] and prevents installing packages that are too recent. It is also significantly faster.[9] Node.js does not ship Pnpm but it ships Corepack, which allows us to use Pnpm without installing it. Pnpm can also list licenses used by each dependency, removing the need for license-compliance (a35ca8, 42 dependencies).

For additional speed improvements, beyond switching to Pnpm and Oxlint, Vite was replaced with its faster Rolldown version (463827).

After these changes, Akvorado “only” pulls 225 dependencies. 😱

Next steps

I would like to land three features in the next version of Akvorado:

- Add Grafana dashboards to complete the observability stack. See issue #1906 for details.

- Integrate OVH’s Grafana plugin by providing a stable API for such integrations. Akvorado’s web console would still be useful for browsing results, but if you want to build and share dashboards, you should switch to Grafana. See issue #1895.

- Move some work currently done in ClickHouse (custom dictionaries, GeoIP and IP enrichment) back into the outlet service. This should give more flexibility for adding features like the one requested in issue #1030.

I started working on splitting the inlet into two parts more than one year ago. I found more motivation in recent months, partly thanks to Claude Code, which I used as a rubber duck. Almost none of the produced code was kept:[10] it is like an intern who does not learn. 🦆

[1] Many attempts were made to make the BMP component both performant and not blocking. See for example PR #254, PR #255, and PR #278. Despite these efforts, this component remained problematic for most users. See issue #1461 as an example.

[2] Some features have been pushed to ClickHouse to avoid the processing cost in the inlet. See for example PR #1059.

[3] This is the biggest commit:

    $ git show --shortstat ac68c5970e2c | tail -1
     231 files changed, 6474 insertions(+), 3877 deletions(-)

[4] Broadcom is known for its user-hostile moves. Look at what happened with VMware.

[5] As a Debian developer, I dislike these mechanisms that circumvent the distribution package manager. The final straw came when Go 1.25 spent one month in the Debian NEW queue, an arbitrary mechanism I don’t like at all.

[6] In the early years of Go, channels were heavily promoted. Sarama was designed during this period. A few years later, a more nuanced approach emerged. See notably “Go channels are bad and you should feel bad.”

[7] This should be investigated further, but my theory is that the intern package uses 32-bit integers, while unique uses 64-bit pointers. See commit 74e5ac.

[9] An even faster alternative is Bun, but it is less available.

[10] The exceptions are part of the code for the admonition blocks, the code for collapsing the table of contents, and part of the documentation.