TLS termination: stunnel, nginx & stud, round 2

Vincent Bernat

A month ago, I published an article comparing the performance of stunnel, nginx and stud as TLS terminators. The conclusion was to use stud on a 64-bit system, with session caching and AES. stunnel was unable to scale properly and nginx exhibited significant latency issues. I got constructive comments on many aspects. Therefore, here is the second round. The protagonists are the same but both the conditions and the conclusions have changed a bit.

Update (2016-01)

stud is now unmaintained. It has been forked by the Varnish team and is now called hitch. However, I would rather consider HAProxy. It now features TLS support, supplied by one of the major contributors to stud.

Benchmarks

The hardware platform remains unchanged:

- HP DL 380 G7

- 6 GiB RAM

- 2 × L5630 @ 2.13GHz (8 cores), hyperthreading disabled

- 2 × Intel 82576 NIC

- Spirent Avalanche 2900 appliance

We focus on the following environment:

- Ubuntu Lucid, featuring a patched version of OpenSSL 0.9.8k with AES-NI support built in and enabled by default

- 2.6.39 kernel (250 HZ)

- 64-bit

- 1 core for HAProxy, 2 cores for network cards,¹ 4 cores for TLS, 1 core for the system

- system limit of 100,000 file descriptors per process

On the TLS side, the main components are:

- 2048-bit certificates

- AES128-SHA cipher suite

- 8 HTTP/1.0 requests per client

- HTTP response payload of 1024 bytes

If you are interested in different conditions (32-bit, number of cores, 1024-bit certificates, proportion of session reuses), have a look at the previous article whose results on the matter are still valid. I only consider 2048-bit certificates because most certificates issued today are 2048-bit. In SP800-57, NIST advises that 1024-bit RSA keys will no longer be viable after 2010 and advises moving to 2048-bit RSA keys:

> If information is initially signed in 2009 and needs to remain secure for a maximum of ten years (i.e., from 2009 to 2019), a 1024-bit RSA key would not provide sufficient protection between 2011 and 2019 and, therefore, it is not recommended that 1024-bit RSA be used in this case.

And the protagonists are:

- stud, @e207dbc5a3, with session cache enabled for 20,000 sessions, listen backlog of 1000 connections;

- stunnel 4.44, configured with `--disable-libwrap --with-threads=pthread` (configuration); and

- nginx 1.1.4, configured with `--with-http_ssl_module` (configuration).

Update (2011-10)

The configuration of nginx has been updated to switch off both multi_accept and accept_mutex, thanks to the feedback of Maxim Dounin on latency issues that were present in the previous article (and still present in the early version of this article).

HAProxy (configuration) is used for stunnel and stud to add load-balancing.

For each test, we want to maximize the number of HTTP transactions per second (TPS) while minimizing latency and unsuccessful transactions. The maximum number of allowed failed transactions is set at 1 transaction out of 1000. The maximum allowed average latency is 100 ms.²
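The acceptance rule can be sketched in Python. This is only an illustration: the `Point` record, its field names and the helper functions are hypothetical, and the lookahead follows one reading of the relaxed latency rule given in the footnote (a point also counts as valid when the mean latency of the next 8 points stays under 100 ms). Refusing to forgive a burst when fewer than 8 later points exist is an assumption of this sketch.

```python
from dataclasses import dataclass
from statistics import mean

MAX_AVG_LATENCY_MS = 100.0  # maximum allowed average latency
LOOKAHEAD = 8               # later points used to forgive a temporary burst


@dataclass
class Point:
    """One measurement point (hypothetical layout, not a real tool's format)."""
    tps: float             # successful transactions per second
    attempted: int         # transactions attempted
    failed: int            # transactions that failed
    avg_latency_ms: float  # average latency for this point


def is_valid(points, i):
    """Return True if point i meets both the failure and latency criteria."""
    p = points[i]
    # At most 1 failed transaction out of 1000; failures are never forgiven.
    if p.failed * 1000 > p.attempted:
        return False
    if p.avg_latency_ms < MAX_AVG_LATENCY_MS:
        return True
    # Latency burst: accept it only if the next points recover on average.
    following = points[i + 1:i + 1 + LOOKAHEAD]
    if len(following) < LOOKAHEAD:
        return False  # assumption: not enough later data to forgive the burst
    return mean(q.avg_latency_ms for q in following) < MAX_AVG_LATENCY_MS


def max_valid_tps(points):
    """Highest TPS among valid points, or 0.0 if no point qualifies."""
    return max(
        (p.tps for i, p in enumerate(points) if is_valid(points, i)),
        default=0.0,
    )
```

Calling `max_valid_tps` on the list of points of one test run gives the figure such a criterion would report for that run.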

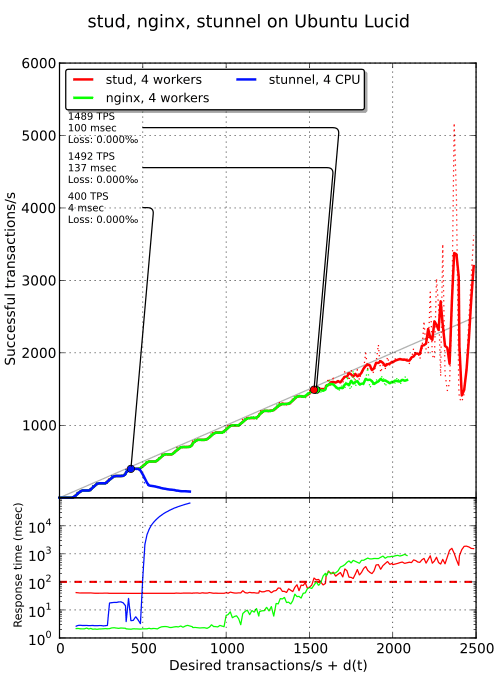

Control case

Below is the first comparison between stud, nginx and stunnel on plain Ubuntu Lucid. As in the previous benchmarks, stunnel is not able to scale, with a maximum of 400 TPS. However, the early latency issues of nginx have been solved, thanks to the change in configuration. stud and nginx get similar performance but, as you can see on the bottom plot (whose scale is logarithmic), stud starts with an initial latency of 40 ms.

This is pretty annoying. I have tried some micro-optimizations to solve this issue but without luck. If latency matters to you, stick with stunnel or nginx (or find where the problem lies in stud).

Update (2011-10)

After I reported this issue, a fix was committed. The culprit was Nagle’s algorithm, which improves bandwidth at the expense of latency. With the fix, performance is mostly the same but there is no latency penalty any more.
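For readers unfamiliar with it, Nagle’s algorithm is controlled per socket through the standard `TCP_NODELAY` option. The Python sketch below only illustrates that option on a fresh socket. It is not the actual stud patch, and the helper name is made up for the example.

```python
import socket


def make_low_latency_socket():
    """Create a TCP socket with Nagle's algorithm disabled (illustrative helper)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # TCP_NODELAY=1 sends small writes immediately instead of coalescing them.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


if __name__ == "__main__":
    with make_low_latency_socket() as s:
        # A non-zero value means Nagle's algorithm is disabled on this socket.
        print(s.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0)
```

The trade-off is the one described above: more small packets on the wire in exchange for lower per-write latency.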

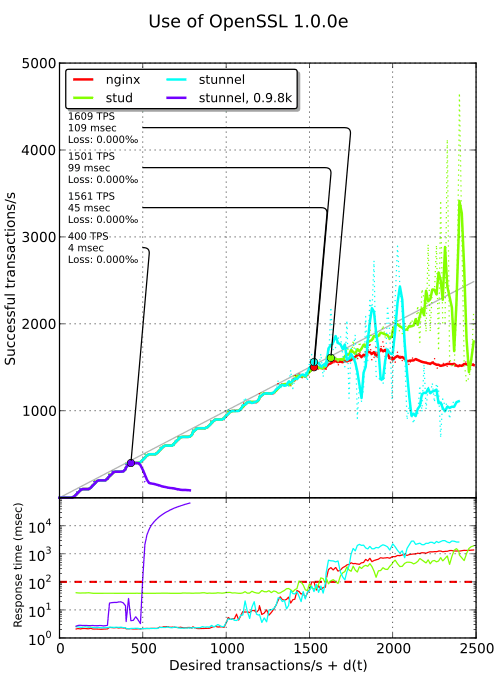

OpenSSL 1.0.0e

When I published the first round of benchmarks, Michał Trojnara was dubious about the bad results of stunnel. He told me on Twitter:

> I solved it! You compiled stunnel against a 4 years old OpenSSL with MT-unsafe SSL_accept. Upgrade OpenSSL and rebuild stunnel. This bug was fixed in OpenSSL 1.0.0b. I implemented a (slow) workaround, so stunnel doesn’t crash with earlier versions of OpenSSL.

Therefore, I backported Ubuntu Oneiric OpenSSL 1.0.0e to Ubuntu Lucid³ and, as you can see on the plot below, there is a huge performance boost for stunnel: about 400%! stud improves slightly too (about 8%) but still starts with a latency of 40 ms, while nginx does not improve.

Intel Accelerator Engine

Most Linux distributions are still using OpenSSL 0.9.8. For example, Debian Squeeze ships with OpenSSL 0.9.8o, Ubuntu Lucid with 0.9.8k and RHEL 5.7 with 0.9.8e. Therefore, they do not benefit from recent performance improvements. To solve this, Intel released Intel Accelerator Engine, which also brings, among other things, AES-NI support:

> This is essentially a collection of assembler modules from development OpenSSL branch, packed together as a standalone engine. “Standalone” means that it can be compiled outside OpenSSL source tree without patching the latter. Idea is to provide a way to utilize new code in already released OpenSSL versions constrained by support and release policies limiting their evolvement to genuine bug fixes.

Alas, in my tests, there was no improvement compared to OpenSSL 1.0.0e (already patched with AES-NI support).

stunnel comes with out-of-the-box support for additional engines. Just add something like this to your stunnel.conf:

    engine=dynamic
    engineCtrl=SO_PATH:/WOO/intel-accel-1.4/libintel-accel.so
    engineCtrl=ID:intel-accel
    engineCtrl=LIST_ADD:1
    engineCtrl=LOAD
    engineCtrl=INIT

For stud, you should grab support for engine selection from GitHub and for nginx, specify the engine with ssl_engine.

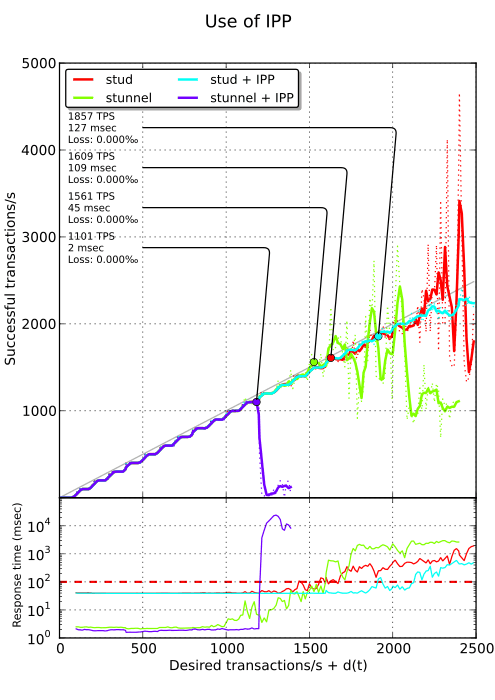

Intel Performance Primitives

In his great article “Accelerated SSL,” Simon Kuhn shows huge performance improvements by using Intel’s IPP library, which is aimed at leveraging the power of multi-core processors and includes some cryptographic primitives, along with a patch to make use of them in OpenSSL. Unfortunately, this library is not free, not even as in “free beer.” The patch provided by Intel only applies to OpenSSL 0.9.8j. Simon updated it for OpenSSL 1.0.0d. It also applies with a slight modification to OpenSSL 1.0.0e. Look at Simon’s article for instructions on how to compile OpenSSL with IPP.

Simon measured a 220% improvement on RSA 1024-bit private key operations by using this library. Unfortunately, we don’t get such a performance boost with stud, which exhibits a 15% improvement (but remember that private key operations only happen once every 8 transactions thanks to session reuse). Worse, with stunnel, performance is reduced by one third and nginx just segfaults. I think such a library is risky to use in production.
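One way to reason about the gap between a 220% gain on raw RSA operations and a 15% gain in TPS is Amdahl’s law. The Python sketch below is a back-of-the-envelope model, not a measurement: it assumes the library speeds up only the RSA part of the work, the shares passed in are hypothetical, and Simon’s figure was obtained with 1024-bit keys while this benchmark uses 2048-bit certificates.

```python
def overall_speedup(fraction, part_speedup):
    """Amdahl's law: overall speedup when `fraction` of the work
    becomes `part_speedup` times faster."""
    return 1.0 / ((1.0 - fraction) + fraction / part_speedup)


def implied_fraction(overall, part_speedup):
    """Invert Amdahl's law: share of the work that must be accelerated
    to observe the `overall` speedup."""
    return (1.0 - 1.0 / overall) / (1.0 - 1.0 / part_speedup)


RSA_SPEEDUP = 3.2  # a 220% improvement means 3.2 times the original speed

if __name__ == "__main__":
    # Hypothetical shares of CPU time spent in RSA private key operations.
    for fraction in (0.1, 0.2, 0.5):
        gain = overall_speedup(fraction, RSA_SPEEDUP)
        print(f"{fraction:.0%} of time in RSA -> {gain:.2f}x overall")
    # Share of RSA work that would explain a 15% gain under this model.
    print(f"implied share: {implied_fraction(1.15, RSA_SPEEDUP):.0%}")
```

Under this model, the less time a request spends in private key operations (which session reuse reduces), the less a faster RSA implementation can help.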

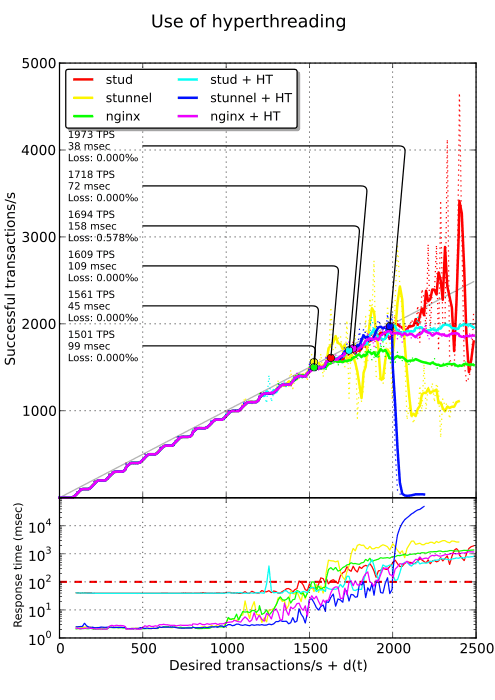

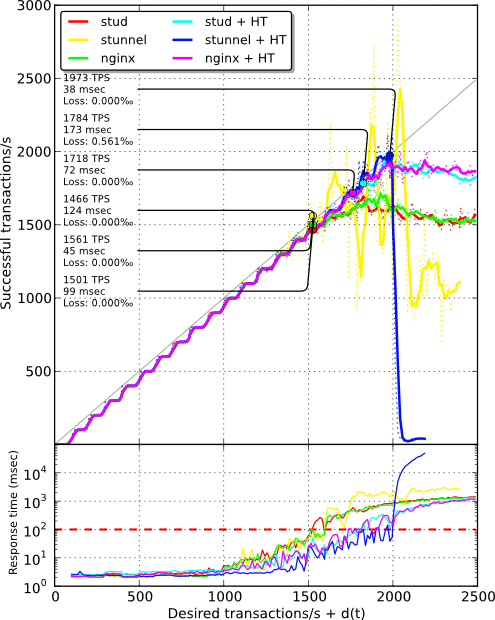

Hyperthreading

During the previous tests, only half the capacity of each core was used. For stud, adding more workers per core only slightly improves this situation (less than 10% with 4 workers per core). Therefore, I thought using hyperthreading could rectify the situation here. In this case, stud, stunnel and nginx are allocated 8 “virtual” cores instead of 4. stud only got a small improvement of 5%, nginx a moderate improvement of 11% while stunnel got a significant improvement of 26%.

Conclusion

Here is a quick recap of the maximum TPS reached in each test (while keeping average response time below 100 ms). All tests were run on 4 physical cores with 2048-bit certificates and the AES128-SHA cipher suite. Each client makes 8 HTTP requests and reuses the first TLS session.

| Context | nginx 1.1.4 | stunnel 4.44 | stud @e207dbc5a3 |

|---|---|---|---|

| OpenSSL 0.9.8k | 1489 TPS | 400 TPS | 1492 TPS |

| OpenSSL 1.0.0e | 1501 TPS | 1561 TPS | 1609 TPS |

| OpenSSL 1.0.0e + IPP | - | 1101 TPS | 1857 TPS |

| OpenSSL 1.0.0e + HT | 1718 TPS | 1973 TPS | 1694 TPS |
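The relative changes between rows can be recomputed from the table. The short Python sketch below does only that arithmetic on the figures above; the dictionary layout and function name are illustrative choices. It reports both the ratio and the percentage change, since the two are easy to confuse (a ratio of 4 is a change of +300%).

```python
# Maximum TPS from the recap table (None where no result is available).
TPS = {
    "OpenSSL 0.9.8k":       {"nginx": 1489, "stunnel": 400,  "stud": 1492},
    "OpenSSL 1.0.0e":       {"nginx": 1501, "stunnel": 1561, "stud": 1609},
    "OpenSSL 1.0.0e + IPP": {"nginx": None, "stunnel": 1101, "stud": 1857},
    "OpenSSL 1.0.0e + HT":  {"nginx": 1718, "stunnel": 1973, "stud": 1694},
}


def change(baseline, context, server):
    """Return (ratio, percent change) for `server` between two rows,
    or None when one of the two results is missing."""
    before, after = TPS[baseline][server], TPS[context][server]
    if before is None or after is None:
        return None
    ratio = after / before
    return ratio, (ratio - 1.0) * 100.0


if __name__ == "__main__":
    comparisons = [
        ("OpenSSL 0.9.8k", "OpenSSL 1.0.0e"),
        ("OpenSSL 1.0.0e", "OpenSSL 1.0.0e + IPP"),
        ("OpenSSL 1.0.0e", "OpenSSL 1.0.0e + HT"),
    ]
    for baseline, context in comparisons:
        print(f"{baseline} -> {context}")
        for server in ("nginx", "stunnel", "stud"):
            result = change(baseline, context, server)
            if result is None:
                print(f"  {server}: no result")
            else:
                ratio, percent = result
                print(f"  {server}: x{ratio:.2f} ({percent:+.1f}%)")
```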

I would also like to stress that below 1500 TPS, stunnel adds an overhead of a few milliseconds while stud adds 40 milliseconds. With OpenSSL 1.0.0e and hyperthreading enabled, stunnel has both good performance and low latency under low to moderate loads. You may just want to limit the number of allowed connections to avoid collapsing under high loads. While in the previous round, stud was the champion and stunnel lagged behind, the situation is now reversed: stunnel is on top!

Update (2011-12)

As said earlier, the latency issue with stud has been solved. Performance remains mostly the same. Here is what the latest plot looks like after the fix:

1. HAProxy and network cards don’t need dedicated cores for this kind of load. However, if you also want to handle non-TLS traffic, these dedicated cores can get busy.

2. The real condition is more complex. Each point is considered valid if the corresponding average latency is less than 100 ms or if the average on the next 8 points is less than 100 ms. Temporary latency bursts are therefore allowed. We are not so forgiving with failed transactions.

3. This is pretty straightforward: I just dropped multiarch support. I chose to use the Ubuntu version of OpenSSL instead of upstream because there are some interesting patches, like automatic support of AES-NI.