Mobile browsing & content optimization

Vincent Bernat

Since my laptop features WWAN connectivity, I purchased a 3G mobile broadband plan from SFR, a French mobile phone company. I took the cheapest one available: 250 MiB per month for €10.

Optimizing transparent proxy by SFR

While browsing the mobile version of Le Monde, a French daily newspaper, I was intrigued by multiple requests to non-public IP addresses such as 10.141.0.4. I enabled Chromium developer tools and watched what happened in the “Network” tab: all images were served from addresses like 10.141.0.4. Looking at the HTML source code, I discovered that <img> tags had been rewritten to point to these images. The smaller ones were identical to the originals, but the larger ones (more than 50 KiB) were of reduced quality (and therefore reduced size).
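As a side note, spotting such addresses in a list of resource URLs is easy to script. Here is a minimal Python sketch using only the standard library. The function name `private_hosts` and the sample URLs are invented for illustration; only the address 10.141.0.4 comes from the observation above.

```python
import ipaddress
from urllib.parse import urlsplit


def private_hosts(urls):
    """Return the URLs whose host is a literal IP address in a non-public range."""
    found = []
    for url in urls:
        host = urlsplit(url).hostname
        try:
            address = ipaddress.ip_address(host)
        except (TypeError, ValueError):
            # Host is missing or is a DNS name rather than a literal IP: skip it.
            continue
        if address.is_private:
            found.append(url)
    return found


# Hypothetical image URLs; the paths are invented for the example.
sample = [
    "http://10.141.0.4/some/image.jpg",
    "http://s1.lemde.fr/image/example.jpg",
]
print(private_hosts(sample))
```

Only literal IP addresses are checked. A DNS name that resolves to a private address would not be caught by this sketch.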

<img> tags were not the only ones to be rewritten. Tags pointing to external stylesheets and scripts (such as <script> tags with a src attribute) were also rewritten to include the CSS or the JavaScript inline. Before:

    <script src="http://s1.lemde.fr/js/lib/jquery/1.5.1/jquery.js"></script>

After:

    <script oldSrc="http://s1.lemde.fr/js/lib/jquery/1.5.1/jquery.js" NG="replaced">
    /*!
     * jQuery JavaScript Library v1.5.1
     *
     * Copyright 2011, John Resig
     * Dual licensed under the MIT or GPL Version 2 licenses.
     * http://jquery.org/license
     * […]
     */
    </script>

This means that each page that includes jQuery, a popular JavaScript library, as an external resource, will be turned into a page that includes the whole jQuery code which is about 85 KiB. Each page will weigh more than 85 KiB instead of relying on an external and cacheable resource.
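To check whether a given page has been rewritten this way, one could look for the oldSrc attribute in the HTML received. The following Python sketch does this with the standard library parser. The class name `RewriteDetector` is hypothetical and the sample page is a shortened version of the rewritten tag shown above.

```python
from html.parser import HTMLParser


class RewriteDetector(HTMLParser):
    """Collect the original URLs of <script> tags that carry an oldSrc attribute."""

    def __init__(self):
        super().__init__()
        self.inlined = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, so "oldSrc"
        # is seen as "oldsrc" here.
        attributes = dict(attrs)
        if tag == "script" and "oldsrc" in attributes:
            self.inlined.append(attributes["oldsrc"])


# Shortened sample: the inlined library body is replaced by a comment.
page = (
    '<script oldSrc="http://s1.lemde.fr/js/lib/jquery/1.5.1/jquery.js" '
    'NG="replaced">/* inlined library */</script>'
)
detector = RewriteDetector()
detector.feed(page)
print(detector.inlined)
```

This only detects the marker observed on this particular proxy. Another product may rewrite pages without leaving such an attribute.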

Nowadays, most browsers support HTTP keep-alive, which allows multiple resources to be requested over a single TCP connection. There are two cases:

- If the resource is served from the same server as the HTML page, keep-alive would give us almost the same result without hindering the cache: the browser would first request the HTML page, then the JavaScript resource over the same TCP connection. There is a small penalty of about 100 ms to let the browser request the second resource. The browser may decide to open another connection because the initial document is not loaded yet. Some browsers may even negotiate this additional connection before needing it. In this case, it can use this idle connection at no cost. Otherwise, we may fall into the second case.

- If the resource is served from another server (as in the example above) or if the browser does not have an idle connection ready for reuse, it would download the resource in parallel over another connection. This only costs about 300 ms of additional time, due to the establishment of a new TCP connection with the server. These 300 ms are a very small price to pay compared to the 2 seconds needed to download 85 KiB for each page. Moreover, other static resources will likely be downloaded from the same server: the cost is therefore 300 ms the first time and about 100 ms for each additional resource (the round-trip time for a new HTTP request over the same TCP connection).
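The trade-off in the second case can be put into numbers with a rough Python sketch. It reuses the figures quoted above (2 seconds to download the library, 300 ms for a new connection) and assumes, as a simplification of mine, that the browser caches the external resource after the first page and never revalidates it. The function names are invented for the example.

```python
DOWNLOAD_S = 2.0        # time to download the 85 KiB library (figure from the text)
NEW_CONNECTION_S = 0.3  # cost of establishing a new TCP connection (from the text)


def inlined_cost(pages):
    """Extra time, in seconds, when the library is inlined in every page."""
    return pages * DOWNLOAD_S


def external_cost(pages):
    """Extra time, in seconds, when the library is an external resource.

    Assumes the browser fetches it once over a new connection, caches it
    and does not revalidate it on later pages.
    """
    if pages == 0:
        return 0.0
    return NEW_CONNECTION_S + DOWNLOAD_S


for pages in (1, 5, 20):
    print(pages, inlined_cost(pages), external_cost(pages))
```

Under these assumptions, inlining is slightly cheaper for a single page but loses as soon as a second page is visited.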

Update (2011-06)

In a previous version of this article, I confused the pipelining and keep-alive mechanisms. While keep-alive allows one to reuse an existing connection to download a new resource, only one request at a time can be issued per connection. Pipelining allows one to push additional requests over the same TCP connection without waiting for the answers. As noted by Éric Daspet in a comment, pipelining is disabled in most browsers. However, keep-alive is well supported.

Searching the web for oldSrc and NG="replaced", I found very little information about the software in use. It seems that such a feature may also break some scripts, like TinyMCE, which try to fetch additional resources relative to the path where the script is installed. It appears that SFR is using a product from Flash Networks called Harmony Gateway. I have asked SFR for more information and whether this feature can be disabled, but I have received no answer so far.

I tried again a bit later and JavaScript and CSS were no longer rewritten. Images were still degraded and served by a special server. The next day, my requests did not travel through a transparent proxy anymore (no more Via header in responses). Each attempt was made from a different location: the proxies may be installed at some locations only and configured differently. Another explanation is that SFR silently disabled this functionality for my account.
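The Via check mentioned above can be expressed as a small Python helper. This is only a sketch: the function name `via_proxies` and the sample header values are invented, and the absence of a Via header does not prove that no proxy is involved, since a proxy may simply not announce itself.

```python
def via_proxies(headers):
    """Return the intermediaries listed in a Via header, or an empty list.

    `headers` is a mapping of header names to values. Names are compared
    case-insensitively, since HTTP header names are case-insensitive.
    """
    for name, value in headers.items():
        if name.lower() == "via":
            return [hop.strip() for hop in value.split(",") if hop.strip()]
    return []


# Invented header values, for illustration only.
proxied = {"Content-Type": "text/html", "Via": "1.1 proxy.example"}
direct = {"Content-Type": "text/html"}
print(via_proxies(proxied), via_proxies(direct))
```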

If done correctly, such an accelerator service could be offered as an opt-out option, since most users would appreciate faster page downloads. If you care about network neutrality, you could then disable it, but the option needs to be properly advertised, for example as a free option in your mobile plan.

Setting up our own optimizing proxy

Google provides mod_pagespeed, an Apache module that optimizes a website on the fly. It is targeted at optimizing your own website but, since Apache can act as a regular proxy, it seems possible to enable mod_pagespeed for any website.

This is easy to set up if you don’t mind using a precompiled version of mod_pagespeed. Download mod_pagespeed; I downloaded the 64-bit version for Debian.

    # touch /etc/default/mod-pagespeed
    # dpkg -i ~/download/mod-pagespeed-beta_current_amd64.deb
    Selecting previously deselected package mod-pagespeed-beta.
    (Reading database ... 277699 files and directories currently installed.)
    Unpacking mod-pagespeed-beta (from .../mod-pagespeed-beta_current_amd64.deb) ...
    dpkg: dependency problems prevent configuration of mod-pagespeed-beta:
    mod-pagespeed-beta depends on apache2.2-common; however:
    Package apache2.2-common is not installed.
    dpkg: error processing mod-pagespeed-beta (--install):
    dependency problems - leaving unconfigured
    Errors were encountered while processing:
    mod-pagespeed-beta
    # apt-get install -f
    […]
    Unpacking apache2.2-common (from .../apache2.2-common_2.2.19-1_amd64.deb) ...
    Processing triggers for man-db ...
    Setting up apache2.2-common (2.2.19-1) ...
    […]
    # apt-get install apache2-mpm-worker
    […]
    Unpacking apache2-mpm-worker (from .../apache2-mpm-worker_2.2.19-1_amd64.deb) ...
    Setting up apache2-mpm-worker (2.2.19-1) ...
    Starting web server: apache2.
    # a2enmod proxy_http
    Considering dependency proxy for proxy_http:
    Enabling module proxy.
    Enabling module proxy_http.
    To activate the new configuration, you need to run:
    /etc/init.d/apache2 restart

Then, we modify /etc/apache2/mods-enabled/proxy.conf:

    ProxyRequests On
    <Proxy *>
      AddDefaultCharset off
      Order deny,allow
      Deny from all
      Allow from 127.0.0.1
    </Proxy>

It makes little sense to run the proxy on your laptop, since you do not want to download the non-optimized content over your WWAN link. You need to run it on another server and therefore allow your laptop to access it.

We also need to modify /etc/apache2/mods-enabled/pagespeed.conf to add the directive ModPagespeedDomain *, which lets mod_pagespeed rewrite any page. Restart Apache after that. Now, check that the proxy is working:

    $ curl -six http://localhost:80 http://www.lemonde.fr/ | grep ^X-Mod-Pagespeed
    X-Mod-Pagespeed: 0.9.17.7-716
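The same check can be scripted. Below is a hedged Python sketch using only the standard library. The function names `pagespeed_version` and `fetch_headers` are mine, and the default proxy address simply mirrors the curl command above. Nothing is fetched until `fetch_headers` is called.

```python
import urllib.request


def pagespeed_version(headers):
    """Return the X-Mod-Pagespeed value from a header mapping, or None."""
    for name, value in headers.items():
        if name.lower() == "x-mod-pagespeed":
            return value
    return None


def fetch_headers(url, proxy="http://localhost:80"):
    """Fetch `url` through the given HTTP proxy and return the response headers."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy})
    )
    with opener.open(url, timeout=10) as response:
        return dict(response.headers.items())


# Usage, assuming the proxy configured above is listening on localhost:80:
#   print(pagespeed_version(fetch_headers("http://www.lemonde.fr/")))
```

A return value of None would mean the response did not carry the header, either because the request bypassed the proxy or because mod_pagespeed did not process it.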

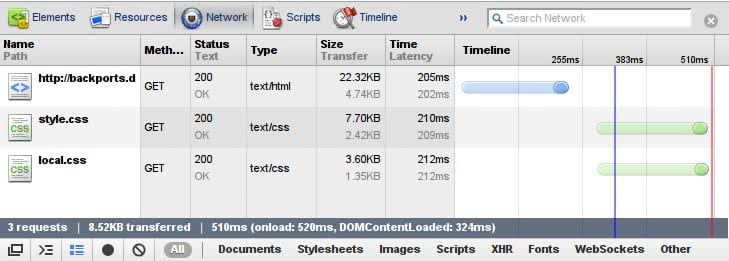

All is working! Let’s try a very simple site, like Debian Backports. It features one HTML file and two CSS files:

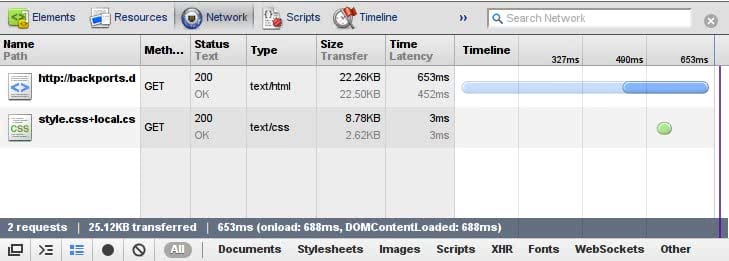

We downloaded a total of 8 KiB (compression included). Let’s use our proxy and try again:

mod_pagespeed succeeded in reducing the size of the HTML file and combined the CSS files. Moreover, it minified the resulting CSS file. There seems to be a bug in Chromium that displays incorrect transfer sizes (including in the timeline representation) when using a proxy. I checked with Wireshark that the overall quantity transferred was about 8 KiB. The gain is very small here, but you can imagine getting better results on larger sites.

mod_pagespeed seems to handle most sites well. However, it only optimizes pages that are already in its cache: the first time you fetch a page, you get the unoptimized version. I do not know whether this is the expected behavior.